What even is AI anyway?

Published: 26 Jun 2019

Artificial Intelligence (AI) is trendy and alluring; interest in it has recovered after several AI winters and it is now attracting millions of dollars in investment, yet it is also deemed one of humanity's biggest existential threats. How can we make sense of this? Let's take a quick step backwards and ask:

What exactly is it?

This is by no means an exhaustive history, nor is it a technical deep dive. This article is a collection of historical and cultural references, anecdotes collected from my 4 years working on the Machine Ethics Podcast, and my own thoughts on the question at hand. I write this both as an enthusiast and a teacher of data science (mostly with the lovely people at Decoded and Cambridge Spark). I write this now because it seems to me that never has there been a time when asking the question "what is AI?" has been met with such a wide range of responses.

TL;DR - When someone refers to AI out of context, ask them: "What does it mean to you? What aspects of these technologies, goals, cultural artefacts, philosophy or belief structure are you conversing with currently?" From this vantage point we should be able to have more specific, positive, less confused conversations about Artificial Intelligence. Here we will walk through some of these ideas.

Of Golems and Greeks

The recent "AI: More than Human" exhibition at the Barbican Centre in London had an excellent preamble to AI technologies, displaying cultural references to human-designed creatures such as the Golem, Robots, Automata, Prometheus, Frankenstein and Japanese Kami (Dr Jennifer Whitney has a great introductory talk on the cultural artefacts of AI and Robots, as well as the tension that they create, here). For a couple of millennia at least, there has been a cultural heritage in the idea of non-human, human-like intelligence. In this instance I'm referring to the intelligence attributed to non-human things that show human-like autonomy. In the story of the Golem they are essentially dumb in their inner world but respond to commands with autonomous intelligence (they understand an instruction and can enact it, but want for nothing), whereas Kami spirits can be tricksters, helpful, malevolent and so on, with inner worlds and goals (alluding to desires).

These stories served as inspiration, praise and fearsome tales, providing ideals and belief systems and warning of the dangers of technologies (apt, perhaps, in the current technological climate). In the Greek myth of Prometheus, he creates human life out of clay and the fire of the gods, though he is ultimately punished for his transgression against the Pantheon. It's no wonder we have misgivings about creating new life out of inanimate stuff ourselves.

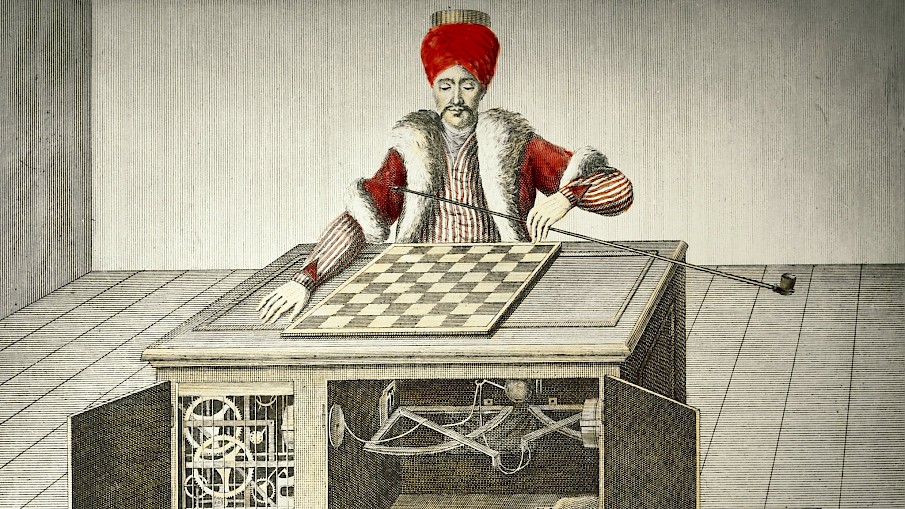

Regardless, during the Renaissance there came a craze for ever more impressive automata. Automata came in all shapes and sizes, with two of the most famous being a goose that seemed to eat and lay eggs, and a humanoid chess player. Automata were about imitating nature through mechanical design: entertainment for the aristocracy. They were almost taboo and titillating; they hinted at a man-made autonomy, a sense of godly power and, indeed, a designed inner world. As the Barbican exhibition also elaborated, these realisations of the idea of intelligent stuff often landed somewhere near the uncanny valley, provoking slightly repulsed responses from onlookers, if those onlookers were not already reproachful on religious grounds. It could be said to be cognitively jarring to see something obviously inanimate behaving animately. It simply isn't the world we know.

Of Monsters and Robots

Frankenstein, Metropolis, I, Robot, 2001, Astro Boy (Mighty Atom), Ghost in the Shell, Moon (notable for not conforming to the now-familiar trope of AI rebelling against its human overlords). Cultural artefacts enable us to lead conversations with some familiarity, to discuss concepts and indeed their repercussions. This is most evident in the artefacts discussing AI themes in the 20th century. It is now a well-worn trope in Science Fiction that in the future there will be Robots, AGIs (artificial general intelligences), or similar biologically based man-made creations, and most of them want us dead! From Frankenstein's monster to 2001's HAL, Metropolis' robot Maria and Terminator's... well, Terminators, they all pose as antagonists in some way to humankind (though this reading is extremely simplified). Few mainstream films paint future AGIs as beneficent or simply passive. Other than children's films, I can think of Moon and Interstellar off the top of my head (both also enjoyable films).

It is apparent that our technologies change how we live, and many conversations around the direction of our endeavours are portrayed in fiction. A well-known fiction holds the basis for the conversation in the academic field of Machine Ethics: Isaac Asimov's I, Robot. I, Robot is the door-opener for many on the conversation of endowing machines with ethical reasoning. Though again it is a fiction demonstrating how NOT to proceed, it gives us a way of thinking about machines that have some amount of autonomy to complete tasks. As Nick Bostrom theorises: we'd better be bloody sure we instruct those tasks correctly or things might come back to haunt us. I feel Nick Bostrom's paperclip thought experiment must have been influenced by Asimov's work: a super-intelligent AI could be given a task that would ultimately be carried out more efficiently if we didn't exist or were subordinate (one could also think of the Matrix film here). Most of Asimov's Robots and, indeed, the paperclip maximiser don't necessitate a sentient AI (with an inner world). These machines have the ability to reason and enact instructions to a superhuman level, akin to a super golem. It is perhaps the realm of philosophy to help deduce whether inner consciousness is required to achieve superhuman capabilities, or whether an AI can act without necessarily knowing why it is doing so.

Let's lean on Science Fiction again to ponder: without feelings we get I, Robot (though not Bicentennial Man), paperclip maximisers and HAL. With feelings we get Ghost in the Shell, Astro Boy, and Marvel's Vision and Ultron...

Alan Turing and John McCarthy

During the 20th century, computers graduated from mechanical curiosities or banks of human workers to intricate encryptors, and then finally to an industrial revolution marking the information age. Though there is lots we could talk about here, it was perhaps Alan Turing whose ideas shone brightest through this transition. Though John McCarthy hadn't yet coined the term Artificial Intelligence, which followed the success of cybernetics, Turing had already postulated the universal Turing machine (that could compute anything computable) and the Turing Test.

The Turing Test is a demonstration of the idea that at some point computers will be so capable (trying not to say "intelligent" here) that they could even trick us (presuming you're a human reading this) into believing they are human: a prophecy, if you like. This idea has resonated through the decades, first resounding at the famous Dartmouth Workshop of 1956, which gave birth to the term Artificial Intelligence and ignited academic appetite for a discipline dedicated to the notion, and the creation, of human-like thinking and acting capabilities.

Marrying the pursuit of AI with the already popular ideas of Robotics, automation (Ford famously being the icon of early automation) and the Automaton brings forth a potent mix of ideas that indeed also gave birth to Terminator, I, Robot, 2001 and many, many other cultural fictions of a future with Artificial Intelligence. Much boom and bust has occurred over the decades following the birth of this intriguing new field of Electrical Engineering, Philosophy, Computer Science and Mathematics (which, as argued on my podcast, possibly also needs more social science and even regulation).

The glorious and the mundane

Harnessing computational progress has been our preoccupation over the last several decades. More, faster, cleverer. We have toppled chess champions by brute-force computation. We have gained almost omnipotent communication and informational potential that slots into our pockets. We've seen a step change in algorithmic thinking to beat the best human Go players. It is no wonder that ideas of AI existential risk are high on many people's minds.

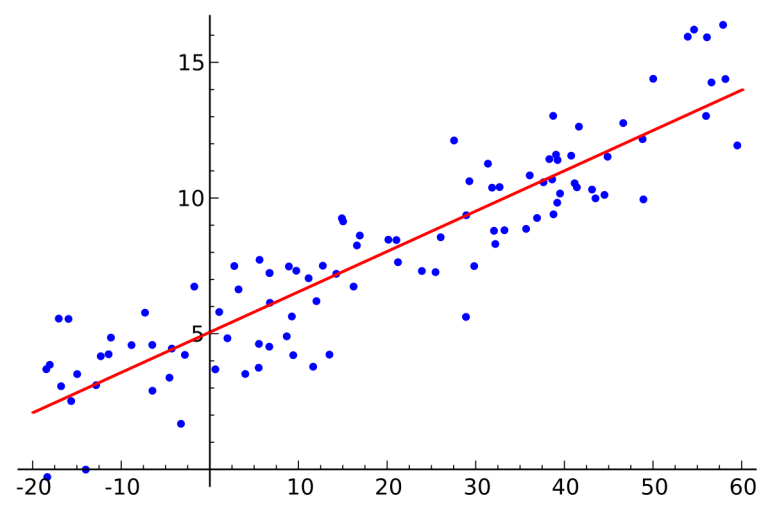

The technology is surely glorious, but it has also brought with it many new downsides. For our purposes, much of the newness in AI research and recent conversation has been due to Machine Learning (ML) algorithms and the greater computing speeds available to run them. Again, ML is one of those slippery terms; for us it means statistical modelling algorithms used to create predictions. Some of them can be worked through by hand; for others it is extremely unclear how they arrive at their outputs. AI in many jobs today can be described as the mundane (in terms of the lack of magic or wonder) use of ML frameworks to predict an outcome from data: whether you're eligible for a loan or not, whether that email is spam, or what you're likely to buy next. Artificial Intelligence has, for many, been conflated with ML in this way, as it is both practical and close at hand. There are universities, online learning courses, conferences and companies dedicated to making better predictions from incoming data.
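To make the "mundane" side concrete, here is a minimal sketch of the spam example in Python. It is only an illustration: the four training messages are invented, and I have picked a naive Bayes word-count model because it is one of the ML algorithms that can be followed by hand, not because it is what any real spam filter uses. The names `TRAINING`, `train` and `predict` are my own.

```python
import math
from collections import Counter

# Hypothetical toy data, invented purely for illustration.
TRAINING = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.split())
    return word_counts, label_counts


def predict(text, word_counts, label_counts):
    """Return the label with the highest naive Bayes log-score."""
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    total_messages = sum(label_counts.values())
    scores = {}
    for label in word_counts:
        words_in_label = sum(word_counts[label].values())
        # Start from how common the label is overall.
        score = math.log(label_counts[label] / total_messages)
        for word in text.split():
            # Add-one smoothing so an unseen word does not zero the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (words_in_label + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


word_counts, label_counts = train(TRAINING)
print(predict("claim your free prize", word_counts, label_counts))
print(predict("team meeting on monday", word_counts, label_counts))
```

There is no magic here: the "learning" is counting words, and the "prediction" is asking which pile of counts a new message most resembles.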

Though the philosopher in you might say, "well, that's not a world away from humans turning energy and information into action", it still seems a world away from what we now call AGI (artificial general intelligence: a system capable of reasoning in many domains). It is also not completely clear how the road to AGI may be trodden, or what will happen when we get there. AI encompasses the ideas of ML algorithms but also the road to AGI, which could be created from new breakthroughs in ML (or, my feeling is, from a combination of ML, genetic algorithms, randomness and a lot of processing power).

What do I mean when I say AI?

I hope through reading this that you can see there is a melting pot of ideas and heritage in Artificial Intelligence and its component ideas. What do I see in my mind when people ask me about AI? Well, I see HAL from 2001: A Space Odyssey, complex logical predicaments from I, Robot, Ghost in the Shell's internet consciousnesses, and more besides. However, how do I think about AI? I concern myself mostly with genetic algorithms, Machine Learning, and state machines and trees for games. Why? Because they are close at hand, interesting and hugely beneficial. Whether AGI, or superintelligence, is a boon or a bane is totally unclear currently.

Quotes from the podcast in response to the question: what is AI to you?

- ... doing the right thing at the right time. AI being an artificial version - Rob Wortham

- ... a moving of the goal posts, what is AI is constantly moving - Cosima Gretton

- Not a technology but a goal - Greg Edwards

- Soon as it works no-one calls it AI anymore ... zero intelligence, amazing capabilities - Luciano Floridi

- ... achieving goals - Christopher Noessel

- Something that can generalise - Damien Williams

- Machine Learning, you get an input, it’s processed by software and you get both an output but also an update to the software - Miranda Mowbray